What Noam Chomsky gets wrong about AI

Einstein and ‘ML Einstein’ facing off (AI generated on lexica.art)

In a recent article published in The New York Times by Noam Chomsky, Ian Roberts, and Jeffrey Watumull, the authors made crucial errors regarding Large Language Models (LLMs) and failed to focus on the right questions about them.

In general, I think the misconceptions in the article are pretty common, and limit useful discourse around language models. The sooner we can dispel some of them, the better the field will be, so I thought I’d take an attempt at briefly addressing some errors.

I found three specific claims in the article particularly striking and will explain why they are incorrect below.

The claims in question are:

- The human brain is and will remain fundamentally much more sample efficient than ML models like ChatGPT

- The measure of human intelligence is the ability to apply the scientific method

- The authors claim to have found specific examples of ML model failure. I demonstrate how models actually succeed in both

Claim 1: The human brain is and will remain fundamentally much more sample efficient than ML models like ChatGPT

The authors claim that machine learning models like ChatGPT are fundamentally less sample efficient than the human brain, but they fail to provide a set of figures to contextualize this claim. While humans consume less data than some of these large machine learning models, the models usually offer significantly more breadth of capabilities than humans.

In fact, the difference is much smaller than implied in the text. Stable diffusion was trained on a dataset which contains as many images as a human sees in 3 months through the course of normal life, and composes images significantly better than most of us. ChatGPT was trained on two orders of magnitude more texts than an average American reads during their lifetime. It’s also much more proficient in a variety of domains than humans.

Finally, as LLM training techniques have improved, they’ve started leveraging high-quality instructions for fine-tuning which are minuscule datasets in comparison to the original training data, showing that sample efficiency can improve tremendously with better methods.

Given all of this, it isn’t clear at all that human learning is quantitatively more efficient than current ML methods, and while human learning has been optimized over hundreds of thousands of years, we are still discovering algorithmic improvements to machine learning every month. So much for the human mind’s efficiency.

Claim 2: The measure of human intelligence is the ability to apply the scientific method

The article sets an unrealistic benchmark for measuring the intelligence of current machine learning models by comparing their outputs to the body of work produced by Albert Einstein. The authors essentially argue that the ability to apply the scientific method is “the most critical capacity of any intelligence,” using the theory of gravity as an example.

This benchmark is unrealistic to say the least. The concept of the scientific method itself was first codified in the 20th century, long after billions of humans had already lived. While having an AI contribute to science is an important milestone, it should not be used as the sole benchmark for defining “human intelligence.”

Today’s models are able to translate between languages better than most humans, retrieve scientific knowledge (mostly) accurately, and understand, summarize and answer questions about complex texts. Sure, they aren’t Karl Popper, but that doesn’t mean they don’t exhibit intelligence.

More generally, a lot gets lost when specifically trying to dissect which aspects of “intelligence” models exhibit, and which they lack. A better measure of progress is to design empirical tasks and to continuously measure progress against them. When doing so, we can see that the field has made tremendous progress in some areas like language understanding, and comparatively less in others such as manipulation of physical objects.

Claim 3: Models fail on specific examples due to their lack of language understanding

Towards the end of the article, the authors finally provide some falsifiable claims for their arguments, which they could have immediately falsified if they carried on a bit more due diligence.

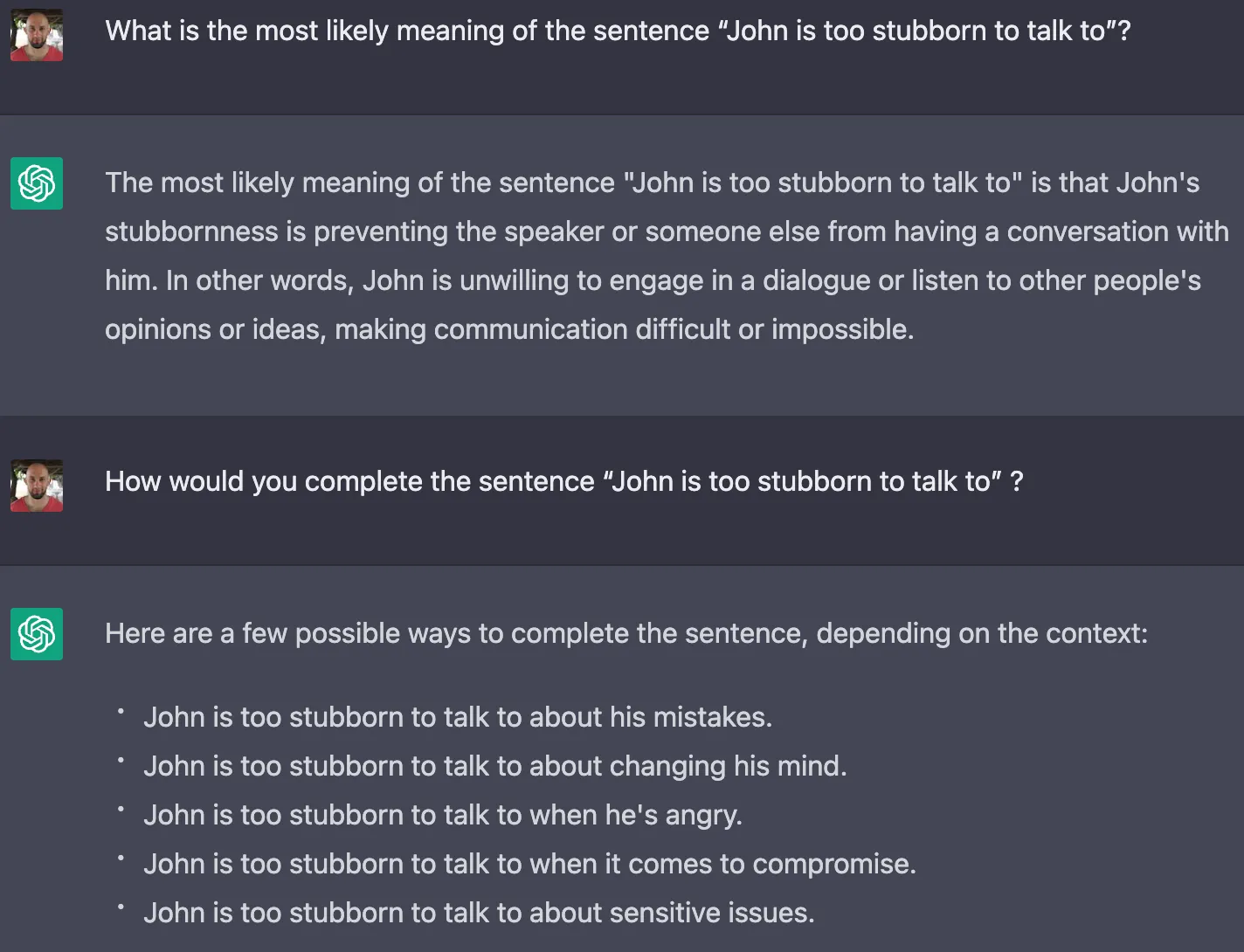

The article claims that machine learning models like ChatGPT fail to properly parse and understand certain phrases, such as “John is too stubborn to talk to.” However, empirical testing of modern language models shows that they are capable of understanding and parsing this phrase with ease.

ChatGPT isn’t fooled by the question in the article. Neither were other models I tried

ChatGPT isn’t fooled by the question in the article. Neither were other models I tried The authors also prompt ChatGPT to take a moral stance, which it refuses to do. However, this is not an inherent limitation of the model, but rather a result of its final phase of training, Reinforcement Learning from Human Feedback phase. Models can be trained to espouse arbitrary moral stances, as has been shown over the course of ChatGPT’s deployment as its answers to political questions changed as further fine-tuning was performed. Instead of demonstrating the inability to “balance creativity with constraint,” this experiment shows the opposite: a model can be trained to generate creative answers to a myriad of questions, while being constrained in its answers in some domains (morality in this example).

Conclusion

There are a lot of other issues in the piece, and overall it felt very reminiscent of speeches that some teachers gave when Wikipedia gained in popularity, describing it as categorically inferior to alternatives.

Criticizing a novel tool like ChatGPT won’t stunt its growth if people find it useful, and large language models are being used more and more every day. These models are fantastically useful and have significant potential to contribute to society, and we must address the concerns surrounding their use in a responsible way.

But in my opinion, this criticism should be based on empirical results and on concrete attributes of the technology at hand, rather than on hypotheses about the true nature of intelligence. As language models continue to evolve and improve, I hope that the discourse around their capabilities and limitations continues to improve.