Always start with a stupid model, no exceptions.

To build General AI, you must first master Logistic Regression

Let’s start with the basics

When trying to develop a scientific understanding of the world, most fields start with broad strokes before exploring important details. In physics, for example, we start with simple models (Newtonian physics) and progressively dive into more complex ones (relativity) as we learn which of our initial assumptions were wrong. This allows us to solve problems efficiently, by reasoning at the simplest useful level.

Everything should be made as simple as possible, but not simpler. -Albert Einstein

The exact same approach of starting with a very simple model can be applied to machine learning engineering, and it usually proves very valuable. In fact, after seeing hundreds of projects go from ideation to finished products at Insight, we found that starting with a simple model as a baseline consistently led to a better end product.

When tackling complex problems, simple solutions, like the baselines we’ll describe below, have many flaws:

- They sometimes ignore important aspects of the input. For example, simple models often ignore the order of words in a sentence or the correlations between variables.

- They have a limited ability to produce nuanced outputs. Many simple models will need to be paired with heuristics or hand-crafted rules before they can be put in front of a customer.

- They are not as fun to work with, and might not get you the cutting-edge research experience you are looking to develop.

The misguided output these solutions often produce can look very silly to us, hence the term “stupid models.” However, as we describe in this post, they provide an incredibly useful first step: they help us understand our problem better, which tells us how best to approach it. In the words of George E. P. Box:

All models are wrong, but some are useful.

In other words: if you want to play with fun toys, start with a complex model. If you want to solve problems and build products, start with a stupid one.

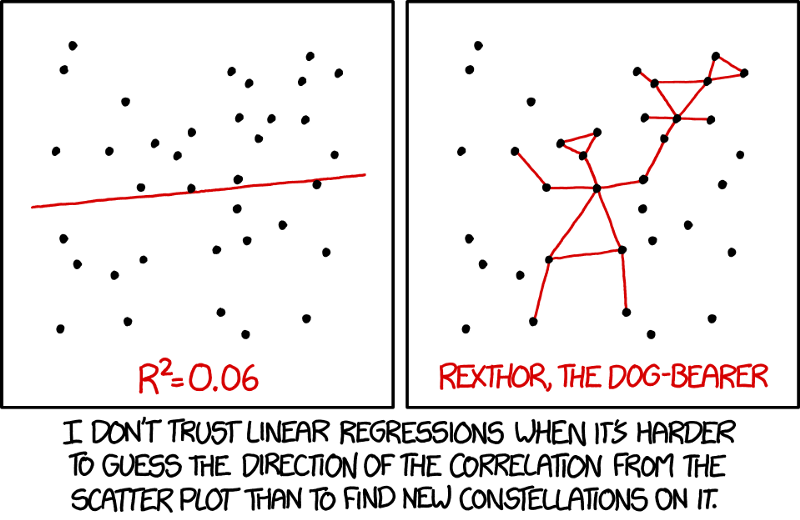

What is a baseline?

Different distributions require different baselines (source)

Fundamentally, a baseline is a model that is both simple to set up and has a reasonable chance of providing decent results. Experimenting with them is usually quick and low cost, since implementations are widely available in popular packages.

Here are a few baselines that can be useful to consider:

- Linear Regression. A solid first approach for predicting continuous values (prices, age, …) from a set of features.

- Logistic Regression. When trying to classify structured data or natural language, logistic regressions will usually give you quick, solid results.

- Gradient Boosted Trees. A Kaggle classic! For time series predictions and general structured data, it is hard to beat Gradient Boosted Trees. While slightly harder to interpret than other baselines, they usually perform very well.

- Simple Convolutional Architecture. Fine-tuning VGG or retraining some variant of a U-net is usually a great start for most image classification, detection, or segmentation problems.

- And many more!

Your choice of a simple, baseline model depends on the kind of data you are working with and the kind of task you are targeting. A linear regression makes sense if you are trying to predict house prices from various characteristics (predicting a value from a set of features), but not if you are trying to build a speech-to-text algorithm. In order to choose the best baseline, it is useful to think of what you are hoping to gain by using one.
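To show how little code a baseline such as logistic regression needs, here is a from-scratch sketch trained with plain full-batch gradient descent on the log loss. In practice you would more likely call scikit-learn's `LogisticRegression`; this version only avoids dependencies. The toy dataset, learning rate, and epoch count are illustrative assumptions, not values from a real project.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_logistic_regression(X, y, lr=0.5, epochs=5000):
    """Unregularized logistic regression fit by full-batch gradient descent on log loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # gradient of the log loss with respect to the logit
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Average the gradients over the batch and take one step
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(w, b, X, threshold=0.5):
    """Predict class 1 when the modelled probability reaches the threshold."""
    return [int(sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) >= threshold) for xi in X]

# Hypothetical toy data: two features, label 1 when both features are large
X = [[0.0, 0.1], [0.2, 0.3], [0.4, 0.1], [0.9, 0.8], [1.0, 0.7], [0.8, 1.0]]
y = [0, 0, 0, 1, 1, 1]

w, b = fit_logistic_regression(X, y)
preds = predict(w, b, X)
accuracy = sum(p == t for p, t in zip(preds, y)) / len(y)
print("weights:", w, "bias:", b, "train accuracy:", accuracy)
```

When features are on comparable scales, the learned weights in `w` also give a first, rough look at which inputs the model relies on.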

Why start with a baseline?

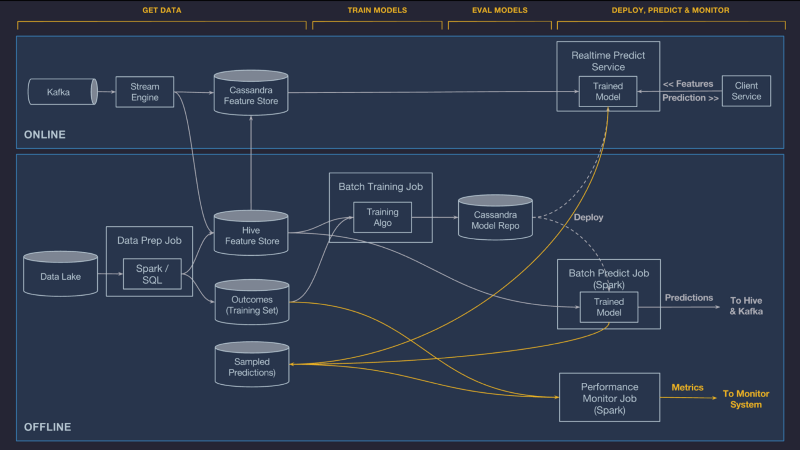

Deploying a model takes a lot of work; make sure to explore before you commit (source)

When starting on a project, the first priority is learning what potentially unforeseen challenges will stand in your way. Even if a baseline will not be the final version of your model, it lets you iterate very quickly while wasting minimal time. Below are a few reasons why.

A baseline will take you less than 1/10th of the time, and could provide up to 90% of the results.

Multiply your productivity by 9!

Here is a very common story: a team wants to implement a model to predict something like the probability of a user clicking an ad. They start with a logistic regression and quickly (after some minor tuning) reach 90% accuracy.

From there, the question is: Should the team focus on getting the accuracy up to 95%, or should they solve other problems 90% of the way?

Most state-of-the-art models still leave you with non-zero error rates due to their stochastic nature. How much this matters depends on your use case, but in most systems the accuracies of successive steps multiply, meaning you’d rather have 10 successive steps at 90% accuracy (about 35% overall accuracy) than one step at 99% followed by nine at 10% (about 0.000000099% overall accuracy).
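The arithmetic behind this comparison is easy to check. This sketch assumes every step must succeed and that errors at different steps are independent, which is an idealization; real pipelines can have correlated errors.

```python
import math

def pipeline_accuracy(step_accuracies):
    """Overall accuracy when every step must succeed and errors are independent."""
    return math.prod(step_accuracies)

balanced = pipeline_accuracy([0.9] * 10)
lopsided = pipeline_accuracy([0.99] + [0.1] * 9)

print(f"10 steps at 90%: {balanced:.1%}")          # → 10 steps at 90%: 34.9%
print(f"1 step at 99%, 9 at 10%: {lopsided:.1e}")  # → 1 step at 99%, 9 at 10%: 9.9e-10
```

The second figure is a fraction, so it corresponds to roughly 0.000000099 percent.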

Starting with a baseline lets you address the bottlenecks first!

A baseline puts a more complex model into context

Usually there are three levels of performance you can easily estimate:

- The trivially attainable performance, which is a metric you would hope any model could beat. An example of this value could be the accuracy you would get by guessing the most frequent class in a classification task.

- Human performance, which is the level at which a human can accomplish this task. Computers are much better than humans at some tasks (like playing Go) and worse at others (like writing poetry). Knowing how good a human is at something can help you set expectations ahead of time for an algorithm, but since the human/computer difference varies widely by field, it may require some literature search to calibrate.

- The required-to-deploy performance, which is the minimal value that would make your model suitable for production from a business and usability standpoint. Usually, this value can be made more attainable by smart design decisions. Google Smart Reply, for example, shows three suggested responses, significantly increasing its chances of showing a useful result.
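The trivially attainable level is usually quick to compute. scikit-learn ships `DummyClassifier(strategy="most_frequent")` for this purpose; the dependency-free sketch below does the same thing by hand. The click labels are invented for illustration, and they show why this number is worth knowing: on imbalanced data, always predicting the majority class can already reach a high accuracy.

```python
from collections import Counter

def majority_class_baseline(train_labels, test_labels):
    """Return the most frequent training label and its accuracy on the test labels."""
    majority_label, _ = Counter(train_labels).most_common(1)[0]
    correct = sum(label == majority_label for label in test_labels)
    return majority_label, correct / len(test_labels)

# Hypothetical ad-click labels: 1 = clicked, 0 = not clicked
train_labels = [0, 0, 0, 1, 0, 0, 0, 0, 1, 0]
test_labels = [0, 0, 1, 0, 0, 0, 0, 0, 0, 0]

label, accuracy = majority_class_baseline(train_labels, test_labels)
print(label, accuracy)  # → 0 0.9
```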

What is missing from those levels is something I would call “reasonable automated performance”, which represents what can be attained by a relatively simple model. Having this benchmark is vital in evaluating whether a complex model is performing well, and enables us to address the accuracy/complexity tradeoff.

Many times we find that baselines match or outperform complex models, especially when the complex model has been chosen without looking at where the baseline fails. In addition, complex models are usually harder to deploy, which means measuring their lift over a simple baseline is a necessary precursor to the engineering efforts needed to deploy them.

Baselines are easy to deploy

By definition, baseline models are simple. They usually consist of relatively few trainable parameters, and can be quickly fit to your data without too much work.

This means that when it comes to engineering, simple models are:

- Faster to train, giving you very quick feedback on performance.

- Better studied, meaning most of the errors you encounter will either be easy bugs in the model, or will highlight that something is wrong with your data.

- Quicker for inference, which means deploying them does not require much infrastructure engineering and adds little latency.

Once you’ve built and deployed a baseline model, you are in the best position to decide which step to take next.

What to do after building a baseline model?

Is it time to bring out some research papers yet? (source)

As mentioned above, your baseline model will allow you to get a quick performance benchmark. If you find that the performance it provides is not sufficient, then inspecting what the simple model is struggling with can help you choose your next approach.

For example, in our NLP primer, by inspecting our baseline’s errors, we can see that it fails to separate meaningful words from filler words. This guides us to use a model that can capture this sort of nuance.

A baseline helps you understand your data

If a baseline does well, then you’ve saved yourself the headache of setting up a more complex model. If it does poorly, the kind of mistakes it makes are very instructive as to the biases and particular problems with your data. Most issues with machine learning are solved by understanding and preparing data better, not by simply picking a more complex model. Looking at how a baseline behaves will teach you about:

- Which classes are harder to separate. For most classification problems, looking at a confusion matrix will be very informative in learning which classes are giving your model trouble. Whenever performance is particularly poor on a set of classes, it is worth exploring the data to understand why.

- What type of signal your model picks up on. Most baselines will allow you to extract feature importances, revealing which aspects of the input are most predictive. Analyzing feature importance is a great way to understand how your model is making decisions, and what it might be missing.

- What signal your model is missing. If there is a certain aspect of the data that seems intuitively important but that your model is ignoring, a good next step is to engineer a feature or pick a different model that could better leverage this particular aspect of your data.
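As a minimal sketch of the first point, the snippet below builds a confusion matrix by hand and ranks classes by recall (the fraction of each true class predicted correctly). The labels and predictions are hypothetical; in practice `sklearn.metrics.confusion_matrix` computes the same table.

```python
from collections import defaultdict

def confusion_matrix(y_true, y_pred):
    """Nested counts: matrix[true_label][predicted_label]."""
    matrix = defaultdict(lambda: defaultdict(int))
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix

def per_class_recall(matrix):
    """Share of each true class that the model predicted correctly."""
    return {label: row.get(label, 0) / sum(row.values()) for label, row in matrix.items()}

# Hypothetical baseline predictions on a three-class problem
y_true = ["cat", "cat", "dog", "dog", "dog", "fox", "fox", "fox"]
y_pred = ["cat", "cat", "dog", "fox", "dog", "dog", "fox", "dog"]

matrix = confusion_matrix(y_true, y_pred)
recall = per_class_recall(matrix)
hardest = min(recall, key=recall.get)

for label, row in matrix.items():
    print(label, dict(row))
print("hardest class:", hardest)  # → hardest class: fox
```

Here the table shows that most "fox" examples are mistaken for "dog", which is exactly the kind of pattern worth investigating in the data.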

A baseline helps you understand your task

Beyond learning from your data, a baseline model will allow you to see which parts of your inference are easy, and which parts are hard. In turn, this helps you decide in which direction to refine your model so that it handles the hard parts better.

For example, when trying to predict the chance of a given team winning in Overwatch, Bowen Yang started with a logistic regression. He quickly noticed that prediction accuracy drastically improved after the game was more than halfway done. This observation helped him to decide on his next modeling choice, an embedding technique that allowed him to learn from a priori information, which boosted accuracy even before the first minute of the match.

Most machine learning problems follow the “no free lunch” theorem: there is no one-size-fits-all solution. The challenge resides in picking from a variety of architectures, embedding strategies, and models to determine the one that can best extract and leverage the structure of your data. Seeing what a simple baseline struggles to model is a great way to inform that decision.

As another example, when trying to segment cardiac MRIs, Chuck-Hou Yee started with a vanilla U-net architecture. This led him to notice that many of the segmentation errors made by his model were due to a lack of context awareness (small receptive field). To address the problem, he moved to using dilated convolutions, which improved his results significantly.

When a baseline just won’t cut it

Finally, for some tasks, it is very hard to build an effective baseline. If you are working on separating different speakers from an audio recording (the cocktail party problem), you might need to start with a complex model to get satisfying results. In these cases, instead of simplifying the model, a good alternative is to simplify the data: try to get your complex model to overfit to a very small subset of your data. If your model has the expressive power required to learn, it should be easy. If this part proves hard, then it usually means you want to try a different model!
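The overfitting sanity check can be wrapped in a small helper. In this sketch, `fit` and `predict` are hypothetical placeholders for your own training and inference code. A 1-nearest-neighbour model stands in for an expressive model (it simply memorizes its training set), and a constant predictor stands in for one that lacks capacity. The tiny XOR-like dataset is invented for illustration.

```python
def overfit_check(fit, predict, X, y, subset_size=8, target=1.0):
    """Fit on the first `subset_size` examples and check training accuracy."""
    X_small, y_small = X[:subset_size], y[:subset_size]
    model = fit(X_small, y_small)
    preds = predict(model, X_small)
    accuracy = sum(p == t for p, t in zip(preds, y_small)) / len(y_small)
    return accuracy >= target, accuracy

def fit_1nn(X, y):
    """'Training' a 1-nearest-neighbour model just memorizes the examples."""
    return list(zip(X, y))

def predict_1nn(model, X):
    """Label each point with the label of its closest memorized example."""
    def nearest_label(x):
        closest = min(model, key=lambda pair: sum((a - b) ** 2 for a, b in zip(pair[0], x)))
        return closest[1]
    return [nearest_label(x) for x in X]

def fit_constant(X, y):
    """A model with no capacity: remember only the most frequent label."""
    return max(set(y), key=y.count)

def predict_constant(model, X):
    return [model] * len(X)

# Hypothetical XOR-like data that no constant (or linear) model can fit
X = [[0, 0], [0, 1], [1, 0], [1, 1], [0.1, 0.1], [0.1, 0.9], [0.9, 0.1], [0.9, 0.9]]
y = [0, 1, 1, 0, 0, 1, 1, 0]

print(overfit_check(fit_1nn, predict_1nn, X, y))            # → (True, 1.0)
print(overfit_check(fit_constant, predict_constant, X, y))  # → (False, 0.5)
```

A pass only shows that the model can memorize; a failure on such a small subset often points to a capacity problem or a bug in the training loop.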

Conclusion

It’s easy to overlook simple methods once you are aware of the existence of more powerful ones, but in machine learning, as in most fields, it is always valuable to start with the basics.

While learning how to apply complex methods is certainly a challenge, the biggest challenge faced by machine learning engineers is deciding on a modeling strategy for a given task. That decision can be informed by trying a simple model first; if its performance is lackluster, move to a more complex model that would be especially good at avoiding the specific mistakes you’ve seen your baseline make.

At Insight, this is an approach that we’ve seen collectively save thousands of hours for our Fellows, and for the teams that we work with. We hope that you find it useful as well. Feel free to reach out to us with any questions or comments!